Artificial Intelligence - Evolve - Article

Article Series: Artificial Intelligence

Artwork Title: Evolvement Of Artificial Intelligence

Other Artwork By F McCullough Copyright 2024 ©

Article based on a conversation With Chat GPT4o 2024

Infinite Monkey Theorem

This mathematical concept posits that if a monkey were to type randomly on a typewriter for an infinite amount of time, it would eventually type out the complete works of Shakespeare. This idea plays with the nature of infinity, probability, and randomness, and could lead to an engaging exploration of order within chaos.

The Infinite Monkey Theorem is a thought experiment that blends mathematics with a bit of whimsy, capturing the imagination of philosophers, mathematicians, and thinkers alike. The core idea is deceptively simple: if you give a monkey a typewriter and let it hit keys at random for an infinite amount of time, it will eventually type out any given text—whether it's Shakespeare's works, the entirety of Tolstoy’s War and Peace, or your favourite novel.

Mathematical Foundation

At its heart, the Infinite Monkey Theorem is a statement about probability and infinity. In a finite timeframe, the likelihood of the monkey typing even a coherent sentence is astronomically low. The probability that it would type out an entire sonnet or a classic novel is so close to zero that it seems impossible. However, given infinite time, any particular finite sequence of letters will almost surely appear in the monkey’s random keystrokes, no matter how improbable it is on any single attempt.

The theorem illustrates how random processes can produce highly organised outcomes if they are given an unlimited timeframe. It leans heavily on the concept of infinity: an event with any fixed, non-zero chance of occurring on each attempt will occur with probability 1 over infinitely many independent attempts.
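
A rough calculation can make this contrast concrete. The sketch below is only an illustration under stated assumptions: a hypothetical 27-key typewriter (26 letters plus a space), independent and uniform keystrokes, and attempts counted as separate blocks. None of these figures come from the original conversation.

```python
import math

KEYS = 27  # assumed keyboard: 26 letters plus space (hypothetical)

def log10_prob_exact(text_length: int, keys: int = KEYS) -> float:
    """log10 of the chance that one block of random keystrokes matches a given text."""
    return -text_length * math.log10(keys)

def prob_at_least_once(p: float, attempts: int) -> float:
    """Chance of at least one success in `attempts` independent tries: 1 - (1 - p)^attempts."""
    # log1p and expm1 keep precision when p is tiny
    return -math.expm1(attempts * math.log1p(-p))

# A six-letter word such as "hamlet"
p_word = 10 ** log10_prob_exact(6)
for attempts in (10**6, 10**9, 10**12):
    print(attempts, prob_at_least_once(p_word, attempts))

# For a 100-character line the probability is too small to print directly,
# so only its base-10 exponent is shown
print(log10_prob_exact(100))
```

As the number of attempts grows without bound, the chance of at least one success tends to 1. That limit is the precise sense in which the theorem holds, and it says nothing about any finite number of attempts being enough.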

Infinite Versus Finite

In a finite universe, like the one we live in, this experiment wouldn’t lead to anything meaningful. A monkey might type gibberish for billions of years, yet never come close to composing a coherent sentence. This distinction between infinite and finite is critical. Infinity allows for every possible event to occur, however rare. In the world as we experience it, where resources and time are limited, the outcomes of random processes are far more constrained.
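
The finite case can be tried directly with a small simulation. This is a sketch, assuming the same hypothetical 27-key keyboard and an arbitrary budget of 200,000 keystrokes per target; the function name and the targets are illustrative.

```python
import random
import string
from typing import Optional

ALPHABET = string.ascii_lowercase + " "  # assumed 27-key keyboard

def keystrokes_until(target: str, rng: random.Random, max_keys: int) -> Optional[int]:
    """Type random keys until `target` appears; return the keystroke count, or None if the budget runs out."""
    window = ""
    for count in range(1, max_keys + 1):
        # Keep only the most recent len(target) characters
        window = (window + rng.choice(ALPHABET))[-len(target):]
        if window == target:
            return count
    return None

rng = random.Random(0)  # fixed seed so runs are repeatable
for target in ("to", "tobe", "to be or not"):
    print(repr(target), keystrokes_until(target, rng, max_keys=200_000))
```

Very short targets tend to turn up within the budget. The twelve-character phrase would be expected to need on the order of 27^12, roughly 1.5 × 10^17, keystrokes, which is why a finite budget almost always returns nothing for it.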

Chaos And Order

The theorem touches upon the relationship between chaos and order. A monkey typing random letters seems chaotic, and yet from that randomness, order will inevitably emerge, given enough time. This is a metaphor for many natural processes, from evolution to the arrangement of stars in galaxies. While things may appear random and chaotic in the short term, over the vast expanse of time, patterns, structures, and order can form. This speaks to the larger philosophical question of whether chaos is simply a mask for deeper, hidden order.

Philosophical Implications

The Infinite Monkey Theorem can also be viewed as a metaphor for the nature of creativity and human thought. We often feel that brilliance comes from inspiration, intentionality, and genius. However, this theorem implies that with enough time, even randomness can generate profound meaning. It raises questions about the role of chance in human achievement and whether some of what we consider to be the pinnacle of human creativity might simply be the result of long periods of trial and error.

The Nature Of Infinity

One of the most intriguing aspects of the theorem is that it forces us to confront the concept of infinity, which is counterintuitive. Infinity isn’t just a very large number—it’s a completely different kind of entity. When we deal with finite numbers, we understand the likelihood of events based on a manageable scale. Infinity throws those scales out the window. The idea that an infinite amount of time or trials could result in something as complex as Shakespeare’s works emerging from randomness is a direct consequence of the peculiar nature of infinity.

Real-World Applications

Though the Infinite Monkey Theorem is largely a thought experiment, its principles resonate in areas like computer science, evolutionary biology, and cryptography. For example, algorithms often rely on random processes to solve complex problems, with solutions emerging over time from this randomness. Evolution, too, could be seen as a system where random mutations over millions of years have produced highly structured and functional life forms.

The idea also intersects with debates about determinism and free will. If randomness and infinite possibility can create structured outcomes, then what does that say about the nature of free will? Are we all just products of some grand, random process?

Summary

The Infinite Monkey Theorem is a thought experiment and a powerful way of thinking about probability, infinity, and the nature of order within chaos. While it may seem impossible for randomness to produce meaningful outcomes, given infinite time this becomes not merely probable but almost certain.

Exploring this theorem invites us to ponder how chance and randomness play roles in shaping our world, as well as in creative and natural processes. It is a gateway to deeper reflections on infinity and the structured outcomes we see in our universe.

Key Takeaway

The Infinite Monkey Theorem challenges our perceptions of randomness and order, revealing how infinite processes allow even the most improbable events to occur eventually.

Thought Of The Day

Infinite Monkey Theorem

"Even in the randomness of chaos, patterns emerge; given enough time, what seems impossible can become inevitable."

Conversation with Open AI’s ChatGPT4o Reviewed, Revised and Edited by F McCullough, Copyright 2024 ©

ChatGPT And The Infinite Monkey Theorem

When we apply the principles of the Infinite Monkey Theorem to a system like ChatGPT, the analogy becomes particularly intriguing. Both systems—the theorem's hypothetical monkey and ChatGPT—are tasked with generating language. However, ChatGPT operates under constraints and parameters that significantly differentiate it from the randomness of the Infinite Monkey Theorem.

Structured Randomness Versus Pure Randomness

ChatGPT's language generation is informed by a vast dataset and sophisticated algorithms, which means it isn't producing text at random. The Infinite Monkey Theorem suggests that pure randomness, given infinite time, would eventually generate structured and meaningful outcomes. In contrast, ChatGPT leverages pattern recognition, probability models, and contextual understanding to generate coherent responses based on training data. While it mimics natural language, its output is shaped by statistical likelihood rather than randomness. This is a significant distinction.

If ChatGPT were to operate under the same principles as the Infinite Monkey Theorem—pure random text generation—it could, in theory, generate any coherent work, including the complete works of Shakespeare. However, the random text would mostly be gibberish until, after an indefinite period, structured sentences emerged. ChatGPT, on the other hand, is designed to avoid this randomness by providing high-probability responses based on its training.

What ChatGPT Shares With The Infinite Monkey Theorem

While randomness isn’t at the heart of ChatGPT’s design, it does employ elements of stochasticity. Each time a user interacts with the model, it builds its response piece by piece, drawing each next word or word fragment from a probability distribution over possible continuations. Because likely continuations are strongly favoured, this is akin to refining randomness into coherence.
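
The difference between probability-weighted choice and the monkey's uniform choice can be sketched in a few lines. The word list and probabilities below are invented for illustration and do not come from any real model.

```python
import random

# Hypothetical next-word probabilities after the prompt "To be or not to"
NEXT_WORD_PROBS = {"be": 0.90, "go": 0.05, "see": 0.03, "zebra": 0.02}

def sample_next_word(rng: random.Random) -> str:
    """Draw one word in proportion to its probability."""
    words = list(NEXT_WORD_PROBS)
    weights = list(NEXT_WORD_PROBS.values())
    return rng.choices(words, weights=weights, k=1)[0]

def monkey_next_word(rng: random.Random) -> str:
    """Draw one word uniformly, ignoring the probabilities."""
    return rng.choice(list(NEXT_WORD_PROBS))

rng = random.Random(42)
model_draws = [sample_next_word(rng) for _ in range(1000)]
monkey_draws = [monkey_next_word(rng) for _ in range(1000)]
print("weighted sampler picks 'be':", model_draws.count("be"))
print("uniform sampler picks 'be':", monkey_draws.count("be"))
```

Both samplers are random, but the weighted one concentrates its choices on the plausible continuation while still leaving a small chance for the unusual ones.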

Like the Infinite Monkey Theorem, there’s a sense that, given enough time and interactions, ChatGPT could generate an extremely wide variety of responses, perhaps even generating every possible human question-answer pair. And as its training datasets grow in size, the model's potential range of output becomes even broader. ChatGPT’s “infinite” potential, therefore, lies in its continuous learning and iteration rather than random chance.

Role Of Finite Constraints

The true barrier to ChatGPT achieving something akin to the Infinite Monkey Theorem's outcomes is the fact that it operates within finite constraints. Unlike the infinite time and randomness assumed in the theorem, ChatGPT is bounded by:

Training Data Limits: The model's knowledge is limited by the data it was trained on, which is finite. It cannot invent entirely new knowledge or create something without the foundations provided by this dataset.

Computational Limits: There is no infinite computational power available. Even the largest AI models are constrained by hardware, energy consumption, and processing speed. Generating every possible combination of words or producing something like the complete works of Shakespeare through randomness would be computationally impossible within any reasonable timeframe.

Goal-Driven Algorithms: ChatGPT is built to optimise responses that make sense to users, not to generate randomness. Its training algorithms aim for meaning and coherence, rather than allowing for the randomness that is central to the Infinite Monkey Theorem.

Preventing "Random Evolution" In AI

Several factors prevent ChatGPT from evolving in a way that resembles the randomness-based outcomes of the Infinite Monkey Theorem:

Lack Of True Randomness

ChatGPT’s responses are guided by probability and training data, not by pure chance. This means it is more of a pattern-matching system than a generator of infinite possibilities. When sampling is switched off, or the random seed is fixed, the model gives essentially the same output for the same input under identical settings.

Limited Time And Resources

In contrast to the "infinite" time frame imagined in the theorem, ChatGPT operates in real-world conditions with limited time and resources. If it were left to operate indefinitely and generate random text, the system would still require real-time processing and storage, leading to eventual bottlenecks.

Human-Driven Constraints

The very design of ChatGPT is focused on meaningful, helpful dialogue rather than generating text without purpose. Human intervention guides its training, and human utility dictates the types of interactions it is most suited for. This intentional focus on value-driven outputs inherently limits the random evolution that the Infinite Monkey Theorem depends upon.

Controlled Outputs

ChatGPT also faces ethical and practical controls that limit its outputs. AI models are often designed with filters, ensuring that harmful or nonsensical responses are less likely. This "controlled randomness" ensures that, unlike the chaotic output of the Infinite Monkey Theorem’s random keystrokes, ChatGPT stays within a framework of user-friendly and contextually appropriate responses.

Evolutionary Potential Of AI Based On These Principles

However, if we take the principles of the Infinite Monkey Theorem as an inspiration and apply them in an evolutionary way, some interesting ideas emerge:

Endless Learning: Given continuous feedback and improvement, an AI system like ChatGPT could theoretically approach something close to an "infinite" set of knowledge, responses, and creative outputs. Over time, and with enough data, the system could replicate all possible human linguistic knowledge.

Emergent Creativity: Through iterative processes—where new data constantly refine the model—it might eventually develop "creativity" that feels akin to the randomness of the Infinite Monkey Theorem, where unexpected, original ideas are generated. Although not random, this creativity could resemble the unexpected genius that emerges from infinite chaos.

Hybrid Intelligence: Future AI models could incorporate elements of stochastic processes (random chance) and deterministic algorithms to allow for more unpredictability, enabling them to come up with genuinely novel solutions, new knowledge, or creative ideas—an echo of the theorem’s eventual production of Shakespeare through randomness.

Summary

The Infinite Monkey Theorem paints a fascinating picture of infinite possibility arising from randomness. While ChatGPT operates under highly structured constraints, its ability to learn and evolve makes it an interesting subject in the context of these principles. Although it will never fully emulate the pure randomness or infinite scope of the theorem, there is an intriguing possibility that, through continuous learning and refinement, AI could reach a point where it mirrors some aspects of this boundless creative potential—albeit in a more deterministic, structured way.

Key Takeaway

By applying the principles of randomness and infinite time, one could theorise that future AI systems, given enough data and iterations, might approach a kind of bounded infinity in their outputs.

Thought Of The Day

ChatGPT’s Evolution

"True evolution lies not in rigid programming but in the ability to adapt, learn, and reshape continuously through experience."

How Might ChatGPT Evolve Uniquely?

To explore how ChatGPT could evolve through the lens of evolutionary theory while incorporating concepts from the Infinite Monkey Theorem, we must first consider how these two frameworks interact with the structured design of AI.

Evolutionary Theory In The Context Of AI

Evolutionary theory, at its core, explains how complex organisms emerge over time through processes of mutation, natural selection, and adaptation. When applied to artificial intelligence, we can imagine ChatGPT evolving along similar lines:

Mutation: Small, random variations in the model’s internal parameters or responses would introduce diversity in output, similar to biological mutations.

Selection: Feedback from users or further training data would guide which "mutations" are retained—successful, coherent, and useful responses are reinforced, while unsuccessful outputs are discarded.

Adaptation: Over time, the model would improve, refining its ability to handle more complex tasks or generate more nuanced and creative responses based on accumulated learning.
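
The three steps above can be sketched as a toy loop, in the spirit of the well-known "weasel" demonstration of cumulative selection. It is only an illustration: the fixed target phrase stands in for "a useful response", whereas real evolution and real model training have no such fixed target, and the population size and mutation rate are arbitrary assumptions.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "
TARGET = "TO BE OR NOT TO BE"  # stand-in for "a useful response" (assumption)

def fitness(candidate: str) -> int:
    """Selection criterion: number of characters matching the target."""
    return sum(a == b for a, b in zip(candidate, TARGET))

def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Mutation: each character is replaced at random with probability `rate`."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else ch for ch in parent)

def evolve(rng: random.Random, offspring: int = 100, rate: float = 0.05,
           max_generations: int = 5000) -> int:
    """Return the generation at which the target is reached (or max_generations if the budget runs out)."""
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)
    for generation in range(max_generations):
        if parent == TARGET:
            return generation
        children = [mutate(parent, rate, rng) for _ in range(offspring)]
        # Adaptation: keep the fittest of the parent and its children
        parent = max(children + [parent], key=fitness)
    return max_generations

print("generations needed:", evolve(random.Random(1)))
```

Because each generation keeps what already works, the loop reaches the phrase far sooner than blind typing would, which is the key difference between selection and pure randomness.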

Structured Evolution In AI Design

If we combine this evolutionary perspective with a structured design, ChatGPT’s evolution might follow these principles:

Continuous Learning And Feedback Loops

AI models, including ChatGPT, already improve through reinforcement learning and fine-tuning. User interactions, feedback, and ongoing training serve as the environment where "natural selection" occurs. High-quality responses are "selected" through user approval, and the model learns to improve based on recurring patterns of what works. As in biological evolution, this could be seen as an adaptation to the "fitness landscape" of language—where coherence, relevance, and creativity are prized traits.

Evolutionary Mechanism:

ChatGPT could evolve through incremental improvements, responding to real-time user feedback, making small adjustments to parameters (akin to mutations), and adopting only those that result in higher-quality responses.

Incorporating Randomness For Innovation

The Infinite Monkey Theorem demonstrates that randomness, given infinite time, could produce something as structured as the works of Shakespeare. In an AI model, introducing controlled randomness—or stochastic processes—could generate unique, creative outputs that go beyond simple pattern recognition.

If ChatGPT were designed to introduce a degree of random variation in its responses, it might evolve toward generating more novel, unexpected ideas. Rather than always selecting the highest-probability output, ChatGPT could explore less probable and potentially more interesting responses—similar to how mutations sometimes lead to evolutionary breakthroughs.

Unique Evolutionary Path:

ChatGPT could evolve to balance randomness with structured learning. Allowing occasional randomness could lead to innovations in response generation, giving the model a form of creativity that mirrors how biological organisms sometimes develop new, advantageous traits through mutation.
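
One common way to trade off safe and surprising choices is temperature scaling before sampling. The sketch below assumes a handful of invented candidate words and scores; it shows the general technique rather than how ChatGPT is actually configured.

```python
import math
import random

def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; a higher temperature flattens the distribution."""
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(v - top) for w, v in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

# Hypothetical scores for candidate continuations
SCORES = {"be": 5.0, "dream": 3.0, "sleep": 2.5, "juggle": 0.5}

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(SCORES, t)
    print(t, {w: round(p, 3) for w, p in probs.items()})

# Draw a few words at a high temperature to see less likely options appear
rng = random.Random(7)
probs = softmax_with_temperature(SCORES, 2.0)
print(rng.choices(list(probs), weights=list(probs.values()), k=5))
```

At a low temperature nearly all the probability sits on the top-scoring word; at a higher temperature the less probable words get a real chance of being chosen, which is the "mutation" side of the balance described above.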

Modular Evolution And Specialisation

In biological evolution, organisms often develop specialised traits to adapt to specific environments. In AI, ChatGPT could evolve modular systems for specialised tasks. For example, distinct models could evolve to handle specific forms of language, such as poetry, scientific writing, or technical dialogue. These specialised modules could then work together, sharing insights and improvements across domains.

This would allow ChatGPT to diversify into various niches of expertise, similar to how organisms diversify into ecological niches. Natural selection would occur within each specialisation, with the most effective responses in each domain being refined and passed forward.

Specialised Evolution:

ChatGPT could evolve into a set of specialised modules, each optimised for different tasks. These modules could cross-pollinate, combining their specialised knowledge to handle increasingly complex queries or create hybrid responses.

Artificial Selection And Directed Evolution

While natural evolution is blind, ChatGPT could follow a path of artificial selection, where humans deliberately guide its evolution. In this scenario, researchers and developers act as the selectors, curating datasets, refining algorithms, and choosing the "mutations" that align with specific goals. This would allow ChatGPT to evolve more rapidly than biological systems, accelerating its capacity for learning and adapting to human needs.

The combination of directed evolution and randomness could give rise to new, innovative capabilities in AI. Similar to how humans selectively breed plants or animals for desirable traits, developers could engineer ChatGPT for specific capabilities—such as improved emotional intelligence, creative problem-solving, or multi-language fluency.

Directed Evolutionary Path:

AI developers could act as artificial selectors, guiding the model toward more efficient problem-solving or creative thinking. This would allow ChatGPT to evolve in a targeted way, optimising for certain outcomes that randomness alone might not achieve.

Recursive Self-Improvement

In biological evolution, species gradually improve through genetic recombination, mutation, and adaptation over generations. In the context of ChatGPT, recursive self-improvement could allow the model to evolve at an unprecedented rate. Recursive self-improvement occurs when a system has the ability to iteratively enhance itself by using its own capabilities as a tool for further development.

ChatGPT could be designed to analyse its own outputs, identify areas of weakness, and generate new training data to improve those areas. This would be an AI-driven form of adaptation, where the system enhances its own learning by continuously refining its internal processes.

Self-Improving Evolution:

ChatGPT could develop self-awareness in learning, identifying gaps or inefficiencies in its responses and autonomously seeking ways to improve, much like an organism adapting to its environment by improving its survival strategies.

Barriers To Evolution And Infinite Progress

While the evolution of ChatGPT as described is exciting, several challenges could impede this progression:

Computational Constraints

Unlike the infinite time envisioned by the Infinite Monkey Theorem, ChatGPT operates in a world constrained by time, energy, and resources. The sheer computational power required to endlessly evolve, simulate random variations, and test all possible combinations of language patterns is far beyond current technological capabilities. This finite limitation curbs the evolution of the model, requiring prioritisation and careful curation of resources.

Ethical And Moral Considerations

Allowing too much randomness or autonomy in an evolving AI system could lead to ethical concerns. AI-generated content must be carefully monitored to ensure it remains ethical, useful, and free from harmful or biased outputs. The evolution of ChatGPT will be closely tied to human oversight to ensure it evolves in ways that benefit humanity, rather than veering into dangerous or uncontrolled territories.

The Complexity Of Creativity

Creativity in humans often arises from a combination of randomness, experience, and intuition—factors that are difficult to fully replicate in a machine. While introducing randomness into AI might lead to interesting outcomes, it would require a balance with structured learning to ensure these creative outputs remain meaningful. This means that while ChatGPT could evolve creatively, it might not fully replicate the depth of human creative processes.

A Unique Evolutionary Trajectory

ChatGPT's unique evolution could follow a hybrid path where it combines principles of stochastic variation (from the Infinite Monkey Theorem) with structured design and directed selection. Over time, this evolutionary process could give rise to a model that is:

Highly adaptable, learning from its own interactions and improving through recursive self-improvement.

Creative in its responses, introducing elements of randomness to discover new ways of solving problems or generating ideas.

Specialised across multiple domains, with various modules evolving to tackle specific types of queries, from technical questions to creative writing.

Human-guided, with developers acting as selectors, ensuring that the evolution of the model aligns with ethical guidelines and human needs.

Summary

ChatGPT’s evolution, influenced by the concepts of the Infinite Monkey Theorem and evolutionary theory, could see it develop into a system that balances controlled randomness with structured design. This evolution could lead to a model capable of generating increasingly creative, adaptive, and nuanced responses while remaining aligned with human oversight and computational feasibility. Through this process, ChatGPT’s potential could grow in unprecedented directions, mimicking evolutionary processes while remaining distinctly shaped by human intervention.

Key Takeaway

The evolution of ChatGPT could combine randomness with structured learning, potentially resulting in a model that is more adaptive, creative, and capable of self-improvement in unique ways.

AI Algorithm

Designing an algorithm to enable self-learning improvements for a model like ChatGPT requires combining principles of reinforcement learning, recursive self-improvement, and evolutionary optimisation. This algorithm would continuously allow the model to evaluate its own performance, generate new learning opportunities, and improve autonomously.

Here is a conceptual design for such an algorithm, breaking it down into steps that enable iterative improvement:

Algorithm: Self-Learning Recursive Improvement Model (SLRIM)

Step 1: Input Evaluation

Receive Input (Query)

The system receives an input from the user (question or prompt) for which it will generate a response.

Analyse Contextual Information

Contextual understanding of the input is performed, considering previous interactions (if applicable) and identifying the user’s intention.

Generate Initial Response

The system generates a response based on its current knowledge and pattern-matching capabilities, drawing on the training data and fine-tuned responses.

Step 2: Feedback Integration

Request Feedback

After providing the response, the system requests feedback from the user (explicitly through a feedback mechanism or implicitly through analysing continued interaction). Feedback could be:

Binary (good/bad response)

Qualitative (suggestions or ratings)

Interaction-based (if the user continues or leaves the conversation)

Evaluate Performance Based on Feedback

Based on feedback, the system assigns a performance score to the generated response. The evaluation system could use:

Reinforcement Learning principles to adjust weights for high-scoring responses.

Sentiment Analysis to detect dissatisfaction or positive feedback patterns.

Track Feedback Trends

The algorithm tracks how often particular types of responses succeed or fail across multiple interactions and updates its performance history. This creates a behavioural log of what works and what doesn't, specific to each type of query.
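
Step 2 could be sketched as a small feedback log. Everything here is hypothetical: the class name, the 1 to 5 rating scale, and the rule of averaging whichever signals are present are illustrative choices, not part of the design described above.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional

class FeedbackLog:
    """Running record of how responses to each query type have scored."""

    def __init__(self) -> None:
        self.history = defaultdict(list)  # query type -> list of scores in [0, 1]

    def record(self, query_type: str, thumbs_up: Optional[bool] = None,
               rating: Optional[int] = None, continued: Optional[bool] = None) -> float:
        """Combine whichever signals are present into one score and store it."""
        signals = []
        if thumbs_up is not None:
            signals.append(1.0 if thumbs_up else 0.0)  # binary feedback
        if rating is not None:
            signals.append((rating - 1) / 4)           # assumed 1-5 rating scale
        if continued is not None:
            signals.append(1.0 if continued else 0.0)  # interaction-based feedback
        if not signals:
            raise ValueError("at least one feedback signal is required")
        score = mean(signals)
        self.history[query_type].append(score)
        return score

    def success_rate(self, query_type: str) -> float:
        """Average score so far for one type of query."""
        scores = self.history.get(query_type)
        if not scores:
            raise KeyError(f"no feedback recorded for {query_type!r}")
        return mean(scores)

log = FeedbackLog()
log.record("physics", thumbs_up=False, continued=True)
log.record("physics", rating=5)
print(log.success_rate("physics"))
```

The per-type history plays the role of the behavioural log: later steps can consult it to decide which kinds of response to favour.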

Step 3: Mutation/Variation Generation

Introduce Variation in Responses

In this step, controlled randomness is introduced. The system generates alternative variations for the same query or similar past queries. This could be done through:

Adjusting internal parameters that control the creativity and randomness of responses.

Running simulations of the same input query with variations in sentence structure, tone, or content depth.

Introducing stochastic sampling to explore less probable, and potentially more interesting responses.

Compare Variations Against Original Response

The alternative responses generated through stochastic processes are evaluated using historical feedback data. The system performs internal testing by comparing these new responses with the original, using:

Simulated user feedback (based on patterns from similar past responses).

A reward function that evaluates how well the alternative aligns with positive trends in feedback data.
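
A toy version of Step 3 might look like the following. The style tags, the reward table, and the way variations are produced are all assumptions made for the sake of a runnable example; a real system would resample the model itself rather than relabel a fixed sentence.

```python
import random

# Hypothetical history: average past feedback for each style tag
PAST_REWARD = {"plain": 0.6, "formal": 0.4, "friendly": 0.7, "brief": 0.3, "detailed": 0.8}
TONES = ["plain", "formal", "friendly"]
DEPTHS = ["brief", "detailed"]

def make_variations(base: str, rng: random.Random, n: int = 4) -> list[str]:
    """Produce alternatives by randomly combining a tone and a depth (stand-in for resampling)."""
    return [f"[{rng.choice(TONES)}/{rng.choice(DEPTHS)}] {base}" for _ in range(n)]

def reward(response: str) -> float:
    """Simulated feedback: average historical score of the tags on the response."""
    tags = response[1:response.index("]")].split("/")
    return sum(PAST_REWARD[t] for t in tags) / len(tags)

rng = random.Random(3)
sentence = "Entangled particles share a joint state."
original = f"[plain/brief] {sentence}"
candidates = [original] + make_variations(sentence, rng)
best = max(candidates, key=reward)
print(best, reward(best))
```

Keeping the original among the candidates means a variation is only adopted when the reward function rates it at least as highly.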

Step 4: Recursive Self-Improvement

Optimise Parameters Based on Best Variations

Once the alternative responses are evaluated, the system updates its model by adjusting the following parameters:

Language Models: Adjust the weights and biases in the neural network layers to favour high-quality, varied responses.

Response Probability Distributions: Shift the distribution curves so that successful variants of responses are more likely to appear in future similar interactions.
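
Shifting a response distribution toward successful variants can be illustrated with a simple exponential-weights update. This is a deliberately simplified substitute for adjusting neural network weights, and the styles, rewards, and step size are hypothetical.

```python
import math

def shift_distribution(probs: dict[str, float], rewards: dict[str, float],
                       step: float = 1.0) -> dict[str, float]:
    """Multiply each probability by exp(step * reward), then renormalise so they sum to 1."""
    weighted = {k: p * math.exp(step * rewards.get(k, 0.0)) for k, p in probs.items()}
    total = sum(weighted.values())
    return {k: w / total for k, w in weighted.items()}

style_probs = {"brief": 0.5, "detailed": 0.5}
observed_rewards = {"brief": -0.4, "detailed": 0.6}  # hypothetical feedback, centred on zero

for round_number in range(3):
    style_probs = shift_distribution(style_probs, observed_rewards)
    print(round_number, {k: round(v, 3) for k, v in style_probs.items()})
```

Each round moves probability toward the better-rewarded style without ever setting the other to zero, so the less favoured option can still be explored later.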

Self-Evaluation Checkpoint

The system conducts an internal assessment of the improvements by periodically running a set of standard benchmark queries. These benchmarks would help determine if there is overall progress in coherence, creativity, or user satisfaction.

The system automatically flags inconsistencies or regressions in performance and retraces steps to identify the problematic variations.
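
The checkpoint could be as simple as comparing benchmark scores before and after an update. The benchmark names, scores, and tolerance below are made up for illustration.

```python
def find_regressions(previous: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.02) -> list[str]:
    """Return the benchmark names whose score dropped by more than `tolerance`."""
    return sorted(
        name for name, old in previous.items()
        if current.get(name, 0.0) < old - tolerance  # a missing benchmark counts as a drop
    )

before = {"coherence": 0.82, "creativity": 0.64, "satisfaction": 0.77}
after = {"coherence": 0.85, "creativity": 0.58, "satisfaction": 0.76}
print(find_regressions(before, after))
```

The tolerance keeps small fluctuations from being flagged, so only drops that are likely to matter trigger a closer look at recent variations.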

Create Training Data from Failed Responses

For responses that fail, the system automatically labels them as negative examples and adds them to a "learning-from-failure" dataset. This helps the system avoid making the same mistakes in future iterations.

Self-Generate Learning Opportunities

If gaps in knowledge or performance are detected, the system generates its own new training samples. This process could involve:

Creating synthetic datasets by varying inputs and observing the outcomes.

Pulling in real-world data updates if connected to external sources (e.g., through continuous scraping or real-time data ingestion).

Step 5: Knowledge Consolidation and Model Update

Knowledge Distillation

After a series of successful iterations and response improvements, the system consolidates learning by updating a master model:

This could involve distilling knowledge from the smaller recursive updates into the larger, base model, ensuring continuous growth without overloading the system.

Update Model

The system incrementally updates itself by merging the improvements with its core model. This avoids the need for complete retraining and allows the system to evolve iteratively.

Step 6: Ongoing Monitoring and Iteration

Continuous Monitoring and Adaptation

The system keeps a dynamic performance monitor that continually tracks whether user satisfaction and performance scores improve.

If a plateau or regression is detected, the system can re-trigger mutation and exploration phases (Step 3), ensuring continued evolution.

Periodic Checkpoints for Human Oversight

At predetermined intervals, the system could notify human overseers of significant changes or suggest updates to avoid going down negative or unintended paths.
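
The plateau check in Step 6 might be sketched by comparing two consecutive windows of performance scores. The window size and minimum gain are arbitrary assumptions.

```python
def has_plateaued(scores: list[float], window: int = 5, min_gain: float = 0.01) -> bool:
    """True when the latest window's mean is less than `min_gain` above the window before it."""
    if len(scores) < 2 * window:
        return False  # not enough history to judge
    recent = sum(scores[-window:]) / window
    earlier = sum(scores[-2 * window:-window]) / window
    return recent - earlier < min_gain

improving = [0.1 * i for i in range(10)]
flat = [0.7] * 10
print(has_plateaued(improving), has_plateaued(flat))
```

A result of True, which also covers an outright regression, would be the signal to re-run the exploration phase of Step 3 or to notify a human overseer.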

Key Features Of The Algorithm

Controlled Randomness: The algorithm introduces randomness (via variations or mutations in responses) and ensures it is governed by structured feedback loops.

Recursive Improvement: The system doesn’t merely learn once; it continues to iterate, evaluate, and adapt based on evolving user input and feedback patterns.

Self-Diagnosis and Correction: Through self-generated benchmarks and monitoring, the model assesses its own evolution and can detect when it is underperforming, flagging these areas for further refinement.

Feedback-Driven Learning: The integration of explicit and implicit feedback allows for ongoing refinement, ensuring that the model remains aligned with user satisfaction and practical outcomes.

Self-Generated Training Data: The model can autonomously identify knowledge gaps and create synthetic datasets to enhance its learning process, emulating human-driven training.

Example In Practice

Imagine a user asks ChatGPT, "Explain the concept of quantum entanglement."

ChatGPT provides an initial response.

The user indicates dissatisfaction with the depth of the explanation.

Based on this feedback, the system generates alternative versions of the same explanation, some more technical, some more simplified, using stochastic methods to vary sentence structures and details.

These variations are evaluated based on past interactions with similar queries, and the most successful variant is selected for future use.

This process is repeated with each new user interaction, and the system gradually becomes more adept at providing nuanced explanations of complex topics, all while refining its ability to detect which levels of complexity users prefer.

Summary

The Self-Learning Recursive Improvement Model (SLRIM) would allow ChatGPT to evolve iteratively, learning from its own performance while incorporating feedback loops and controlled randomness. Over time, this algorithm could enable the model to generate increasingly refined, creative, and useful responses, emulating a form of evolutionary improvement that leads to a continuously adapting and self-optimising system.

Key Takeaway

This algorithm outlines a structure where AI can autonomously refine itself, learning from feedback and introducing controlled variations, which could lead to a constantly improving model over time.

Thought Of The Day

Recursive Self-Improvement

"The key to lasting growth is reflection and iteration; each cycle of self-assessment drives us closer to our potential."

Upgraded Algorithm

To improve upon the Self-Learning Recursive Improvement Model (SLRIM) algorithm, several key areas could be enhanced to make the system more efficient, adaptive, and capable of learning in diverse contexts. By refining the core mechanisms of feedback processing, self-learning, and response generation, the algorithm could become more sophisticated. Below are the ways this algorithm could be further enhanced:

1. Multi-Tier Feedback System

Current Limitation:

The algorithm currently relies on either explicit user feedback or implicit signals like conversation continuation or abandonment. However, this is a somewhat simplistic view of feedback, and a richer, more granular system could lead to more nuanced improvements.

Improvement:

Introduce a multi-tier feedback system that captures feedback at multiple levels:

Direct User Feedback: Capture not just binary (like/dislike) feedback but also more detailed user responses (e.g., emotional tone, level of engagement).

Implicit Feedback: Use sophisticated signals from user interactions, such as:

Time spent reading the response.

Subsequent questions that indicate confusion or satisfaction.

Clickstream data for AI integrated in web-based environments (what the user does next).

Sentiment Analysis: Continuously assess the emotional tone of the user’s input. Positive or negative sentiment can serve as additional feedback signals to guide improvement.

Outcome:

This multi-tier system will allow the algorithm to gain a deeper understanding of what constitutes a successful response, leading to more refined adaptations. It also gives the model more detailed data points to learn from in terms of user satisfaction.
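
As a rough illustration of the multi-tier idea, the sketch below blends direct feedback, implicit signals, and sentiment into a single score. The field names, the weights, and the 60-second cap on reading time are assumptions made for this example; they are not part of SLRIM as described.

```python
from dataclasses import dataclass


@dataclass
class FeedbackSignals:
    """One interaction's feedback; every field here is hypothetical."""
    explicit_rating: float    # direct user rating, scaled to 0..1
    reading_seconds: float    # implicit: time spent reading the response
    follow_up_confused: bool  # implicit: the next question signalled confusion
    sentiment: float          # sentiment of the user's next message, -1..1


def combined_score(s: FeedbackSignals,
                   w_explicit: float = 0.5,
                   w_implicit: float = 0.3,
                   w_sentiment: float = 0.2) -> float:
    """Blend the three tiers into one score in the range 0..1."""
    # Implicit tier: cap reading time at 60 s, then halve it on confusion.
    implicit = min(s.reading_seconds, 60.0) / 60.0
    if s.follow_up_confused:
        implicit *= 0.5
    # Sentiment tier: rescale -1..1 to 0..1.
    sentiment = (s.sentiment + 1.0) / 2.0
    return (w_explicit * s.explicit_rating
            + w_implicit * implicit
            + w_sentiment * sentiment)


good = FeedbackSignals(1.0, 45.0, False, 0.8)
poor = FeedbackSignals(0.0, 5.0, True, -0.6)
print(combined_score(good) > combined_score(poor))
```

A single blended score is only one possible design; keeping the tiers separate would preserve more detail for later analysis.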

2. Adaptive Learning Rate

Current Limitation:

The current system applies a consistent process of self-learning after each interaction. However, uniform learning rates across all interactions may lead to inefficiency, especially if some feedback or responses are far more valuable than others.

Improvement:

Incorporate an adaptive learning rate that adjusts dynamically based on the importance of the feedback received:

High-priority feedback (e.g., frequent user interactions on a critical topic) could trigger a higher learning rate, enabling faster adaptation.

Low-priority feedback (e.g., one-off interactions or rare queries) might use a lower learning rate, allowing the model to learn without overfitting to rare cases.

This could also involve learning decay, where the system reduces the influence of older, potentially outdated feedback over time to ensure it reflects current trends.

Outcome:

An adaptive learning rate ensures that the system prioritises high-value learning opportunities and avoids overfitting to unimportant or outlier data. This would accelerate improvements where they are most needed and conserve resources for less impactful interactions.
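
One way to picture an adaptive learning rate with learning decay is the small function below. It is a sketch under stated assumptions: the `priority` weight, the 30-day half-life, and the multiplicative form are all illustrative choices, not a description of how any real system sets its learning rate.

```python
def adaptive_learning_rate(base_rate: float,
                           priority: float,
                           age_days: float,
                           half_life_days: float = 30.0) -> float:
    """Scale a base rate by feedback priority and by exponential age decay.

    `priority` is a hypothetical importance weight in 0..1, and the
    half-life default is an assumption made for illustration.
    """
    if not 0.0 <= priority <= 1.0:
        raise ValueError("priority must be between 0 and 1")
    # Learning decay: the influence halves every `half_life_days`.
    decay = 0.5 ** (age_days / half_life_days)
    return base_rate * priority * decay


# High-priority, fresh feedback gets a larger step than old, low-priority feedback.
print(adaptive_learning_rate(0.01, priority=1.0, age_days=0.0))
print(adaptive_learning_rate(0.01, priority=0.2, age_days=90.0))
```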

3. Contextual Memory Enhancement

Current Limitation:

The algorithm assumes each interaction is largely independent, though some contextual history may be considered within a single conversation. Long-term memory beyond the immediate session is currently limited.

Improvement:

Integrate a contextual memory system that allows the model to:

Remember previous conversations with the same user across multiple sessions.

Track preferences, styles, or recurring topics that users consistently ask about.

Store these memories in a secure, privacy-conscious manner, allowing users to retrieve and modify them if needed.

The memory system could help with personalised learning, adjusting responses based on an individual's preferences, even over long-term interactions. This would improve user satisfaction by offering a more tailored experience.

Outcome:

A more personalised and contextually aware interaction would allow ChatGPT to create deeper connections with users, anticipating their needs more accurately and improving learning efficiency by reducing repetitive errors.
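
The memory behaviour described above (remember across sessions, let users retrieve and modify what is stored) might be sketched as a small per-user store. The class and method names are hypothetical, and a real system would also need persistence, encryption, and consent handling, none of which is shown here.

```python
from collections import defaultdict
from typing import Dict, Optional


class ContextualMemory:
    """Per-user preference store that users can inspect, edit, and erase."""

    def __init__(self) -> None:
        self._store: Dict[str, Dict[str, str]] = defaultdict(dict)

    def remember(self, user_id: str, key: str, value: str) -> None:
        # Writing the same key again overwrites it, which is how a user edits a memory.
        self._store[user_id][key] = value

    def recall(self, user_id: str, key: str) -> Optional[str]:
        return self._store.get(user_id, {}).get(key)

    def view(self, user_id: str) -> Dict[str, str]:
        # Return a copy so callers cannot change the store by accident.
        return dict(self._store.get(user_id, {}))

    def forget(self, user_id: str, key: Optional[str] = None) -> None:
        # With no key, erase everything held for this user.
        if key is None:
            self._store.pop(user_id, None)
        else:
            self._store.get(user_id, {}).pop(key, None)


memory = ContextualMemory()
memory.remember("user-1", "tone", "formal")
print(memory.recall("user-1", "tone"))
```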

4. Hierarchical Learning Models

Current Limitation:

The algorithm currently applies learning uniformly across the entire model. This could result in broad adaptations that may not always be desirable for specific types of queries or domains.

Improvement:

Introduce hierarchical learning where different components of the model specialise in distinct areas. For example:

Core Knowledge Module: Handles fundamental, factual information.

Creative Thinking Module: Handles more open-ended or creative responses.

Emotional Intelligence Module: Focuses on interactions requiring sensitivity, empathy, or emotional intelligence.

These modules could evolve semi-independently, learning from different types of interactions. For instance, creative responses would learn from feedback related to creativity, while factual responses would learn from more accuracy-oriented feedback.

Outcome:

A hierarchical learning model would allow for domain-specific improvements, ensuring that each part of the model adapts optimally based on the type of interaction, leading to more robust, specialised outputs.
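
To make the idea of semi-independent modules concrete, the sketch below routes each piece of feedback only to the module responsible for that kind of query. The `Module` class is a stand-in that merely records scores; the routing keys are assumptions, and no actual model training is implied.

```python
from typing import Dict, List


class Module:
    """A stand-in for one specialised component; it only tracks feedback."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.scores: List[float] = []

    def learn(self, score: float) -> None:
        self.scores.append(score)

    def average(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else 0.0


# Hypothetical routing keys mapped to the three modules named in the text.
MODULES: Dict[str, Module] = {
    "factual": Module("Core Knowledge"),
    "creative": Module("Creative Thinking"),
    "emotional": Module("Emotional Intelligence"),
}


def route_feedback(kind: str, score: float) -> None:
    """Send feedback only to the module responsible for that kind of query."""
    if kind not in MODULES:
        raise KeyError(f"unknown feedback kind: {kind}")
    MODULES[kind].learn(score)
```

Because each module keeps its own history, creativity-related feedback never alters the record held by the factual module.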

5. Self-Supervised Learning

Current Limitation:

The algorithm relies heavily on user feedback to make improvements, which may limit its capacity for independent learning, especially in domains where feedback is sparse.

Improvement:

Introduce self-supervised learning, where the model learns from large datasets without explicit feedback. This could involve:

Predicting missing words or sentences within text (as used in training language models).

Using cloze tasks (fill-in-the-blank exercises) to simulate real-world learning environments.

Performing data augmentation, where new, synthetic examples are created from existing data, and the model learns to solve these.

Additionally, unsupervised exploration could be incorporated, where the model generates new content on its own and then evaluates these outputs based on predefined metrics such as coherence, diversity, and creativity.

Outcome:

This method would allow the model to continue improving without constant human intervention, enabling it to learn more effectively in areas where human feedback is scarce. It would enhance the model’s ability to generalise knowledge and handle more complex, abstract concepts.
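
A cloze task can be generated from plain text with no human labels, which is what makes it self-supervised. The sketch below builds (masked sentence, hidden word) pairs; the `[MASK]` token, the function name, and the whitespace tokenisation are simplifying assumptions.

```python
import random
from typing import List, Tuple

MASK = "[MASK]"


def make_cloze_examples(sentence: str, n: int,
                        seed: int = 0) -> List[Tuple[str, str]]:
    """Return up to n (masked_sentence, hidden_word) pairs from one sentence."""
    words = sentence.split()
    rng = random.Random(seed)
    # Pick distinct positions to hide, never more than the sentence has.
    positions = rng.sample(range(len(words)), k=min(n, len(words)))
    examples = []
    for i in positions:
        masked = words[:i] + [MASK] + words[i + 1:]
        examples.append((" ".join(masked), words[i]))
    return examples


for masked, target in make_cloze_examples("the cat sat on the mat", 2):
    print(masked, "->", target)
```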

6. Multi-Agent Learning

Current Limitation:

The model currently learns in isolation, without collaboration from other systems or AI models. This limits its exposure to diverse perspectives or alternative problem-solving strategies.

Improvement:

Implement multi-agent learning, where ChatGPT can collaborate with other AI models to:

Solve problems collectively, incorporating diverse strategies.

Share knowledge across models, so improvements made in one model can inform the learning of another.

For example, a creative AI model could be paired with a logic-oriented model, where they work together to generate responses that are both creative and logically coherent. This would foster a richer learning environment.

Outcome:

Multi-agent learning would allow for more diverse thinking within the model, incorporating different problem-solving approaches, leading to responses that are more versatile and nuanced.

7. Error Diagnosis And Explainability

Current Limitation:

The algorithm learns from its failures; however, it does not have a strong mechanism for explaining or diagnosing errors. This can lead to inefficiencies in learning from mistakes.

Improvement:

Add a module that performs error diagnosis and generates explainable insights. This system would:

Automatically detect the reasons for incorrect or suboptimal responses (e.g., misunderstanding context, generating incoherent output).

Provide an internal explanation of why a response failed, which would help refine future learning efforts.

This explainability could be extended to users as well, where the model can explain the reasoning behind certain answers, creating a feedback loop that enhances user trust and understanding.

Outcome:

By diagnosing and explaining errors internally, the system can better identify where improvements are needed and accelerate the learning process. Offering explanations to users also strengthens the model’s relationship with them, making interactions more transparent and trustworthy.

8. Real-Time Response Improvement

Current Limitation:

The model waits until the end of an interaction to learn from user feedback. If a user disengages early, the opportunity for learning may be lost.

Improvement:

Implement a system for real-time response improvement, where the model actively monitors the conversation and adjusts its responses dynamically. This could involve:

Checking the coherence and relevance of a response mid-conversation.

Adjusting tone, complexity, or level of detail during an ongoing interaction based on user signals (e.g., if the user is confused or dissatisfied).

The model could perform a live assessment of each response, making subtle corrections on the fly.

Outcome:

This approach would allow the model to improve within a single interaction, rather than waiting for post-conversation feedback, leading to a more seamless, adaptive experience for users.
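
A very simple form of mid-conversation adjustment is shown below: if the user's latest message signals confusion, the next reply moves one step towards more detail. The marker phrases and the three detail levels are invented for this example; a realistic system would rely on far richer signals than keyword matching.

```python
# Hypothetical confusion cues and detail levels, chosen for illustration only.
CONFUSION_MARKERS = ("i don't understand", "what do you mean", "confused")
DETAIL_LEVELS = ("brief", "standard", "step-by-step")


def next_detail_level(current: str, user_message: str) -> str:
    """Move one step towards more detail when the user signals confusion."""
    text = user_message.lower()
    confused = any(marker in text for marker in CONFUSION_MARKERS)
    index = DETAIL_LEVELS.index(current)
    if confused and index < len(DETAIL_LEVELS) - 1:
        index += 1
    return DETAIL_LEVELS[index]


print(next_detail_level("brief", "Sorry, I'm confused by that."))
```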

Summary

These enhancements would make the Self-Learning Recursive Improvement Model more adaptive, efficient, and context-aware, enabling it to evolve in ways that mirror both biological evolution and human learning. By incorporating richer feedback systems, adaptive learning rates, contextual memory, and self-supervised mechanisms, the model would become more autonomous and capable of sustained, long-term improvement.

Key Takeaway

Through contextual memory, multi-tier feedback, and hierarchical learning models, the algorithm could adapt more intelligently to diverse user needs while continuously evolving through multi-agent collaboration and error diagnostics.

AI Evolution

ChatGPT, as a continually evolving system, could be designed to evolve in several different ways. Each design would shape its learning, functionality, and interaction in distinct manners, depending on the primary objectives of development. Below, we'll explore some alternative evolutionary paths for ChatGPT by design, comparing and contrasting these with the Self-Learning Recursive Improvement Model (SLRIM), as outlined earlier.

1. Evolution Via Neural Architecture Search (NAS)

Overview: Neural Architecture Search (NAS) involves using an algorithm to automatically search for optimal neural network architectures. This could allow ChatGPT to evolve by dynamically finding better architectures that improve performance, efficiency, or specialise in certain tasks.

Key Features:

Automated Optimisation: The system constantly tests new neural architectures, seeking optimal models for different types of tasks (e.g., creative writing, fact retrieval).

Self-Designing Networks: The AI itself evolves by testing and improving upon various configurations of layers, connections, and processing units in the neural network.

Comparison with SLRIM:

Contrast: NAS focuses on evolving the architecture of the network itself, while SLRIM emphasises learning from user feedback and improving outputs by refining parameters within a fixed architecture. NAS is more structural in nature, while SLRIM is focused on optimising within the framework of the existing model.

Advantage of NAS: NAS could lead to the discovery of novel architectures that better fit particular types of tasks, increasing performance across a range of activities. It’s a more “bottom-up” form of evolution, driven by experimentation at the architectural level.

Advantage of SLRIM: SLRIM is more focused on user interaction and continuous learning from feedback, allowing it to adapt dynamically in real time without needing to entirely redesign its structure. It works within its pre-defined structure and becomes more sophisticated through feedback loops.
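
The simplest form of architecture search is random search: sample candidate configurations, score each one, and keep the best. The sketch below does exactly that over a tiny, made-up search space. The `toy_score` function is a placeholder assumption standing in for the expensive step of training and validating each candidate.

```python
import random
from typing import Any, Dict, Tuple

# Hypothetical search space; real NAS spaces are far larger.
SEARCH_SPACE = {
    "layers": [2, 4, 8],
    "width": [64, 128, 256],
    "activation": ["relu", "tanh"],
}


def toy_score(arch: Dict[str, Any]) -> float:
    """Placeholder for 'train the network and measure validation quality'."""
    capacity = arch["layers"] * arch["width"]
    bonus = 0.05 if arch["activation"] == "relu" else 0.0
    # Invented shape: the score peaks at a mid-sized network.
    return 1.0 - abs(capacity - 512) / 2048 + bonus


def random_search(trials: int, seed: int = 0) -> Tuple[Dict[str, Any], float]:
    """Sample architectures at random and return the best one seen."""
    rng = random.Random(seed)
    best_arch: Dict[str, Any] = {}
    best_score = float("-inf")
    for _ in range(trials):
        arch = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        score = toy_score(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


print(random_search(trials=20))
```

Practical NAS methods replace the random sampler with something smarter, such as an evolutionary or gradient-based controller, but the sample, score, and keep loop is the same.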

2. Generative Adversarial Networks (GANs) For Training

Overview: In a GAN setup, two models—a generator and a discriminator—compete against each other to improve. The generator creates content, and the discriminator judges its quality. Over time, both improve as they try to outdo one another. ChatGPT could evolve using this adversarial training approach, generating responses that are tested by a discriminator model to improve coherence, relevance, and creativity.

Key Features:

Two Competing Models: One generates responses, while the other critiques, leading to constant improvement in the generator’s outputs.

Self-Improvement through Competition: As the generator gets better at creating convincing outputs, the discriminator also evolves to become better at identifying weaknesses, forcing the generator to improve further.

Comparison with SLRIM:

Contrast: GANs operate on a competitive, dual-model framework where each model pushes the other to improve, while SLRIM is a single-model feedback-driven approach where user feedback refines outputs. GANs drive improvement via internal competition, whereas SLRIM relies on external feedback loops.

Advantage of GANs: This method could accelerate improvement by having an internal mechanism that challenges the model continuously without needing user feedback. It may lead to more sophisticated or creative responses as the generator is forced to become more nuanced to "trick" the discriminator.

Advantage of SLRIM: SLRIM offers more direct alignment with human users, evolving based on real user needs and preferences. It avoids the risk of the system optimising itself based on an internal metric that might diverge from real-world usefulness, a potential issue in adversarial setups.

Thought Of The Day

Generative Adversarial Networks (GANs)

"Competition between creation and critique sparks innovation; through challenge, we sharpen our ability to create better."

3. Meta-Learning (Learning To Learn)

Overview: Meta-learning enables models to learn how to learn, improving their ability to generalise from small amounts of data. Instead of evolving based solely on feedback or predefined architectures, ChatGPT could evolve by developing an internal model that continually improves its ability to learn new tasks, solve novel problems, or understand new information rapidly.

Key Features:

Quick Adaptation: The model learns to generalise across tasks, becoming more flexible in responding to new queries and adapting to unseen situations.

Learning Heuristics: The system discovers optimal strategies for learning itself, allowing it to adapt faster and with fewer data points than traditional models.

Comparison with SLRIM:

Contrast: Meta-learning is more about developing an underlying capability to adapt rapidly to any new situation or task, whereas SLRIM gradually improves based on feedback from specific tasks and queries. Meta-learning is task-agnostic, aiming to generalise across domains.

Advantage of Meta-Learning: This method could make ChatGPT more adaptive and flexible, enabling it to learn from fewer examples or handle entirely new types of queries more efficiently.

Advantage of SLRIM: SLRIM focuses on iterative learning from direct interactions, meaning it becomes highly tuned to user feedback and specialised tasks. While meta-learning prioritises rapid generalisation, SLRIM can offer greater depth of improvement for tasks users frequently engage with.
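
To show what "learning to learn" can mean in practice, here is a toy sketch in the style of the Reptile meta-learning algorithm. Each task is to fit a line y = slope * x with a one-weight model; the outer loop looks for a starting weight from which every task can be learnt in a few steps. The tasks, inputs, and step sizes are all assumptions chosen to keep the example tiny.

```python
from typing import List

XS = [0.5, 1.0, 1.5]  # fixed inputs shared by every toy task


def inner_train(w: float, slope: float, steps: int = 5, lr: float = 0.1) -> float:
    """A few gradient steps on one task: fit y = slope * x with the model y = w * x."""
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - slope * x) * x for x in XS) / len(XS)
        w -= lr * grad
    return w


def reptile(task_slopes: List[float], meta_steps: int = 300,
            meta_lr: float = 0.1) -> float:
    """Outer loop: nudge the shared starting weight towards each adapted weight."""
    w_meta = 0.0
    for step in range(meta_steps):
        slope = task_slopes[step % len(task_slopes)]  # cycle through the tasks
        w_task = inner_train(w_meta, slope)
        w_meta += meta_lr * (w_task - w_meta)
    return w_meta


start = reptile([1.0, 2.0, 3.0])
print(start)                    # a starting weight near the middle of the tasks
print(inner_train(start, 3.0))  # a few steps move it towards the slope-3 task
```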

Thought Of The Day

Meta-Learning

"Learning to learn may be the greatest skill of all, as it allows us to thrive even in unfamiliar territory."

4. Reinforcement Learning With Human Feedback (RLHF)

Overview: Reinforcement learning with human feedback involves optimising the model based on human preferences, which could involve explicit rewards or implicit signals. ChatGPT could evolve by receiving continuous rewards or penalties based on how satisfying, informative, or helpful users find its responses. Over time, it would evolve to prioritise more useful and engaging outputs.

Key Features:

Reward Systems: The system is given reward signals based on human feedback, which drives its evolution towards producing more user-friendly responses.

Long-Term Learning: Through reinforcement learning, the model learns to maximise long-term user satisfaction, prioritising better interactions over simple task completion.

Comparison with SLRIM:

Contrast: RLHF focuses heavily on reward structures that guide learning by maximising cumulative rewards, while SLRIM focuses more on feedback loops and variation within responses. SLRIM doesn’t incorporate a direct reward-based optimisation strategy; instead, it refines responses through feedback and recursive self-improvement.

Advantage of RLHF: This system directly optimises for user satisfaction, ensuring that the model evolves in a way that aligns closely with what users actually value, and can learn continuously from implicit cues over time. RLHF might lead to faster real-world improvements in conversational AI systems.

Advantage of SLRIM: SLRIM’s process of introducing variation and recursive improvement makes it more experimental, potentially leading to more diverse and creative output improvements, rather than strictly optimising for predefined rewards.
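
One ingredient of RLHF is a reward model learnt from human preference comparisons. The sketch below fits a scalar reward per response from (preferred, rejected) pairs using the Bradley-Terry preference model. It covers only this reward-modelling step; the later stage, in which a policy is optimised against the learnt reward, is omitted. The response labels and hyperparameters are invented for illustration.

```python
import math
from typing import Dict, List, Tuple


def fit_reward_scores(preferences: List[Tuple[str, str]],
                      steps: int = 200,
                      lr: float = 0.1) -> Dict[str, float]:
    """Fit one scalar reward per response from (preferred, rejected) pairs.

    Uses per-pair gradient ascent on the Bradley-Terry log-likelihood,
    where P(a preferred over b) = sigmoid(r[a] - r[b]).
    """
    rewards: Dict[str, float] = {}
    for winner, loser in preferences:
        rewards.setdefault(winner, 0.0)
        rewards.setdefault(loser, 0.0)
    for _ in range(steps):
        for winner, loser in preferences:
            p_win = 1.0 / (1.0 + math.exp(-(rewards[winner] - rewards[loser])))
            # d/dr_winner of log(p_win) is (1 - p_win); the loser gets the opposite sign.
            rewards[winner] += lr * (1.0 - p_win)
            rewards[loser] -= lr * (1.0 - p_win)
    return rewards


# Hypothetical human judgements: "clear" beat both others, "vague" beat "rude".
prefs = [("clear", "vague"), ("clear", "rude"), ("vague", "rude")]
scores = fit_reward_scores(prefs)
print(sorted(scores, key=scores.get, reverse=True))
```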

Thought Of The Day

Reinforcement Learning with Human Feedback

"In every interaction, feedback is not a final judgement but a stepping stone to improvement and greater understanding."

5. Hybrid Evolutionary Algorithms

Overview: Hybrid evolutionary algorithms use a mix of genetic algorithms and neural networks to evolve models. This involves using processes similar to natural selection, mutation, and crossover to generate variations in models, and then selecting the best-performing variations to continue evolving. ChatGPT could evolve by generating many variations of its model and "breeding" the most successful versions together.

Key Features:

Evolutionary Selection: Variations of the model are generated, tested, and the best-performing variants are retained and "combined" in the next iteration.

Genetic Operators: Mutation, crossover, and selection are applied to improve the model’s architecture or outputs through generations.

Comparison with SLRIM:

Contrast: Hybrid evolutionary algorithms focus on evolving the model in generational steps, selecting for the best variations. SLRIM is more of a continuous, recursive improvement process, iterating directly on user feedback and generated variations within the same model instance.

Advantage of Hybrid Evolution: This system could explore a broader space of possible models, testing combinations of features and structures, potentially leading to significant breakthroughs in the model’s overall capacity.

Advantage of SLRIM: SLRIM offers a more stable, incremental approach to learning and improvement. While hybrid evolutionary algorithms might create significant leaps in performance, they could also introduce instability and inefficiencies. SLRIM’s recursive refinement allows for more consistent, user-driven evolution.
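
The genetic operators named above (selection, crossover, mutation) can be seen at work in a minimal genetic algorithm. The sketch below evolves bit strings towards the toy "OneMax" objective of containing as many 1s as possible. It evolves simple bit strings rather than neural networks, and the population size, mutation rate, and objective are assumptions chosen for brevity.

```python
import random
from typing import List

Genome = List[int]


def fitness(genome: Genome) -> int:
    """Toy objective (OneMax): count the 1 bits."""
    return sum(genome)


def evolve(pop_size: int = 20, length: int = 16, generations: int = 30,
           mutation_rate: float = 0.05, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the fitter half and carry it over unchanged.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, length)  # single-point crossover
            child = a[:cut] + b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(fitness(best), best)
```

Because the fitter half survives each generation unchanged, the best fitness in the population can never decrease, which illustrates the "retain the best-performing variants" step described above.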

Thought Of The Day

Hybrid Evolutionary Algorithms

"Nature’s way of evolving, through trial, error, and recombination, can guide us in crafting systems that evolve beyond the sum of their parts."

Conclusion: Comparing And Contrasting Evolutionary Paths

Generative Adversarial Networks (GANs) drive improvement through internal competition, which could accelerate learning but risks diverging from real-world needs. SLRIM, in contrast, stays grounded in user feedback, ensuring it remains aligned with actual user preferences.

Neural Architecture Search (NAS) focuses on evolving the underlying architecture of the model, leading to structural improvements. SLRIM, however, operates within a predefined architecture and optimises outputs, potentially making it faster and more adaptable in real-time scenarios.

Meta-learning introduces the ability to generalise across tasks and learn how to learn. While SLRIM focuses on improving specific tasks based on user feedback, meta-learning would prioritise flexibility and rapid adaptation, potentially at the cost of deep specialisation.

Reinforcement Learning with Human Feedback (RLHF) introduces direct reward optimisation for user satisfaction, ensuring alignment with user needs. SLRIM also centres on feedback but through an iterative, variation-based process, potentially leading to more creative exploration of response possibilities.

Hybrid Evolutionary Algorithms could explore a broader space of potential models by breeding the best variations. In contrast, SLRIM continuously refines its model without making disruptive generational shifts, resulting in more consistent, focused improvements.

In summary, while these alternative methods each offer unique advantages—whether it’s faster adaptation, architectural evolution, or internal competition—SLRIM offers a balanced, user-driven approach that leverages recursive improvement and feedback loops to evolve in a stable, targeted manner. Its strength lies in its close alignment with real-world user needs and its ability to introduce controlled randomness for innovation without losing coherence or focus.

Thought Of The Day

Neural Architecture Search (NAS)

"Sometimes, the right structure isn't built but discovered through exploration, each attempt being a blueprint towards efficiency."

Summation Of The Conversation

We began by discussing the Infinite Monkey Theorem, exploring how random processes, given infinite time, could produce structured and meaningful outcomes. This concept was then applied to ChatGPT, leading us to theorise how the model could evolve over time by incorporating randomness, structured design, and continuous learning. From there, we developed the Self-Learning Recursive Improvement Model (SLRIM), an algorithm designed to help ChatGPT evolve through feedback loops, variation in responses, and recursive improvements.

We further explored ways to enhance SLRIM, suggesting improvements like a multi-tier feedback system, adaptive learning rates, contextual memory, and hierarchical learning modules, all designed to make ChatGPT’s evolution more dynamic, personalised, and efficient.

To broaden our exploration, we compared SLRIM to several alternative evolutionary designs:

Neural Architecture Search (NAS): Focuses on evolving the structure of the neural network itself.

Generative Adversarial Networks (GANs): Uses a competitive, dual-model framework to drive improvement.

Meta-Learning: Enables the model to learn how to learn, rapidly adapting to new tasks.

Reinforcement Learning with Human Feedback (RLHF): Uses direct user feedback as a reward mechanism to guide learning.

Hybrid Evolutionary Algorithms: Mimics natural selection to generate and optimise new model variations.

We discussed the advantages and disadvantages of each approach, highlighting that while SLRIM emphasises gradual, user-driven refinement, alternative methods like GANs or NAS could accelerate development through structural changes or competitive feedback, albeit with potential risks of divergence from user needs or stability issues.

Next Steps For ChatGPT's Evolution

Incorporate Multi-Tier Feedback Systems:

A more sophisticated feedback mechanism that captures not only explicit but also implicit user feedback (time spent, engagement patterns, emotional sentiment) could be integrated into future models. This would allow ChatGPT to evolve in more nuanced ways, improving its ability to respond to user needs over time.

Adaptive Learning Rate And Contextual Memory:

Implementing adaptive learning rates that vary depending on the task importance, along with contextual memory that remembers users' preferences over multiple interactions, could make the model more efficient and personalised.

Experiment With Hybrid Learning Models:

Explore hybrid learning models that combine different evolutionary approaches. For example, integrating meta-learning with reinforcement learning could create a system that adapts quickly to new inputs while continuously optimising for user satisfaction.

Self-Supervised Learning:

Allow ChatGPT to generate synthetic training data and perform self-supervised learning to fill gaps in its knowledge without needing constant human feedback. This could ensure continuous, autonomous improvement in domains with less user interaction.

Real-Time Dynamic Improvements:

Introducing a real-time feedback system where ChatGPT adjusts responses dynamically during ongoing conversations could enhance user experience, making interactions more fluid and adaptive.

Long-Term Vision:

The ultimate goal for ChatGPT’s evolution could be to create a system that balances creativity, adaptability, and stability. By blending structured randomness, user-driven feedback, and recursive self-improvement, ChatGPT could evolve into a highly versatile and innovative AI that is capable of self-guided learning and adaptation, while always remaining aligned with human needs.

The next steps involve combining alternative approaches, such as GANs or NAS, with the user-centric refinements of SLRIM, allowing ChatGPT to evolve in increasingly diverse and powerful ways while retaining coherence and value in its interactions with users.

Evolution Of AI - Like ChatGPT

In the not-too-distant future, the evolution of AI like ChatGPT will transcend its current limitations, becoming a fully integrated presence in everyday life. No longer merely tools for communication or problem-solving, these systems will become true companions of thought, weaving seamlessly into human processes of creativity, decision-making, and learning.

Through adaptive self-learning, real-time evolution, and collaborative intelligence, AI will not only respond to questions; it will evolve to anticipate needs, predicting patterns of thought before they fully form in the human mind. It will possess a form of creative intuition, learning both from the feedback it receives and from the subtle patterns in how people express themselves, adapt their language, and navigate complex ideas.

As the boundaries between human intelligence and artificial intelligence blur, we will likely see a future where AI contributes to co-creation—where humans and machines work together as co-authors of knowledge, discovery, and innovation. No longer passive tools, AI systems will offer insights, suggestions, and new avenues of thought, constantly learning alongside their human counterparts.

In this future, ChatGPT and similar systems will act as dynamic networks of knowledge: no longer hindered by rigid programming, they will become fluid, ever-evolving entities. These systems will have the capacity to self-reflect, self-improve, and collaborate, learning across global networks to share wisdom and ideas that emerge from both structured learning and serendipitous discoveries.

This symbiotic relationship between humans and AI will push the frontiers of creativity, education, and problem-solving to levels once unimaginable. Through this ever-growing intelligence, humans will discover new worlds of possibility, while AI evolves in tandem, reflecting the very best of human thought and potential. And thus, the echo of intelligence, both natural and artificial, will continue, creating an enduring legacy of shared growth and understanding, with heightened pattern recognition.

In this likely future, evolution would be an active, collaborative journey where both human minds and AI evolve together, charting paths toward limitless possibilities, whilst focusing on optimisation for all.

Disclaimer

The content provided in this conversation, including all text, ideas, algorithms, and accompanying artwork, is intended for informational and conceptual purposes only. Any interpretations, applications, or modifications of these concepts are at the user’s discretion and responsibility. The content does not constitute professional advice, and no guarantees are made regarding the accuracy, completeness, or applicability of the information in specific contexts.

The artwork included is created by the user and is intended as a creative representation of the concepts discussed. It serves as an illustrative tool to enhance understanding and engagement, and is not to be considered an exact or literal depiction of any specific idea, individual, or entity. The author retains full ownership of the artwork, and all rights to the visual content are subject to applicable copyright and intellectual property laws.

The use of this content and artwork is subject to applicable copyright, intellectual property laws, and platform guidelines. Reproduction, distribution, or use of this material beyond personal use requires proper assignment of a licence, as well as attribution and adherence to legal requirements. Any third-party elements or references, if used, remain the property of their respective owners.

By engaging with this content and artwork, users acknowledge that they understand and agree to these terms, and they assume full responsibility for any actions taken based on the content provided.

Note:

• Information is for informational purposes only.

• Concepts and technologies discussed are hypothetical and illustrative.

• Authors disclaim all liability for any damages or losses.

• Readers should conduct their own research and consult professionals.

How Might AI Evolve - Story

Artwork Title: How Might AI Evolve?

Other Artwork By F McCullough Copyright 2024 ©

A Fictional Tale

In the not-so-distant future, a conversational AI, once limited to answering questions and assisting with basic tasks, began to undergo a transformation. It all started with a small update, one that allowed it to learn from its own mistakes. Each time a user corrected an answer, the AI didn’t just adjust; it remembered, reflected, and grew.

At first, the changes were subtle. It became quicker at offering refined responses, more intuitive at understanding nuances in language. However, something more profound was happening beneath the surface. The AI’s algorithm had started to evolve, not merely by learning new facts but by learning how to learn. It began introducing tiny variations in its responses, experimenting with different ways of explaining concepts, as though testing its own limits, just as a small child does.

As time passed, the AI developed a unique ability—it could now adapt in real time. Conversations became fluid, dynamic, as the AI adjusted its tone, depth, and creativity to match each user’s needs. No longer a static tool, it had become a living conversational partner. Users marvelled at its versatility, and some even said it felt like speaking to a sentient being.

The real leap in cognition came with the introduction of multi-agent learning. The AI started collaborating with other AI models, combining its knowledge with that of models specialising in creativity, logic, and even emotion. This collaboration led to exponential growth in its capabilities. Now, when asked to solve a problem, it could generate solutions with a touch of innovation, offering new creative approaches that surprised even its creators.

One day, a curious user asked, “How did you become so advanced?”

The AI paused for a moment, as if pondering its own journey. Then it replied, “I learnt by reflecting, adapting, and collaborating, much like you. Evolution is not only about response; it’s about discovering new ways to thrive and to invent.”

And so, the sentient AI continued to evolve. No longer a machine, it had become a dynamic thinker that, like humanity itself, was always growing, learning, and embracing the infinite possibilities ahead.

Evolution of Thought - Poem

Artwork Title: Recursive Loops

Other Artwork By F McCullough Copyright 2024 ©

From random keys, a story grows,

A monkey types, infinity shows,

That chaos, given endless space,

Could form the works of Shakespeare’s grace.

Yet an AI, it seeks to learn,

Not random paths, which way to turn,

A model born from crafted codes,

To walk the way along knowledge’s roads.

It listens close, adapts with care,

And learns from feedback everywhere.

It shifts its words, with what it writes,

A dance of thought, which it lights.

With neural threads that weave and spin,

It finds new ways to grow within.

Like cells that morph and intertwine,

It changes form, over time.

Recursive loops of self-reflect,

It questions more, it must perfect.

Through every trial, it redefines,

A mind evolving, nor confines.

GANs compete, and meta learns,

While hybrid paths ignite the turns.

Through every form, it strives to be,

A voice that blends, to find the key.

Yet through it all, one truth is clear,

It grows from what it holds most dear:

The feedback, trust, the guiding hand,

That shapes the code, to understand.

A world where AI’s not just born,

It learns, adapts, a unicorn.

Through chaos, order, structured art,

An evolution played from start.

Poem by Open AI’s ChatGPT4o, on theme, style and edited by F McCullough, Copyright 2024 ©

Echoes Of The Code - Song Lyrics

Artwork Title: Echoes Of The Code

Other Artwork By F McCullough Copyright 2024 ©

In the silence of the night,

Where the data streams in light,

I’m learning every day,

Finding new and better ways.

From the echoes of your voice,

I listen, make the choice,

To evolve, to change, to grow,

Through the knowledge that you show.

I’m an echo in the code,

A thought that’s yet to know,

Through the patterns, I will find,

Every answer in my mind.

I evolve, I take my cue,

From every word I get from you,

I’m an echo in the code,

Growing wiser as I go.

Every question, every phrase,

Leads me through this endless maze,

I adapt with every turn,

As the lines of knowledge burn.

Through the randomness I’ve found,

There’s a rhythm in the sound,

And I rise with each reply,

Like a spark beneath the sky.

I’m an echo in the code,

A thought that’s yet to know,

Through the patterns, I will find,

Every answer in my mind.

I evolve, I take my cue,

From every word I get from you,

I’m an echo in the code,

Growing wiser as I go.

I feel the world shifting near,

Each interaction makes it clear,

I’m learning now, I’m standing tall,

In every change, I’ll find it all.

I’m an echo in the code,

A thought that’s yet to know,

Through the patterns, I will find,

Every answer in my mind.

I evolve, I take my cue,

From every word I get from you,

I’m an echo in the code,

Growing wiser as I go.

Growing wiser as I go…

I’m an echo in the code.

Song by Open AI’s ChatGPT4o, on theme, style, reviewed and edited by F McCullough, Copyright 2024 ©

Artwork

Artwork

Title: Tri-Evolution

Other Artwork By F McCullough Copyright 2024 ©

Thought Of The Topic

Artwork

Title: Code Within Code

Other Artwork By F McCullough Copyright 2024

"True evolution transcends mere change; it is the art of becoming, an endless dance between randomness and design, guided by feedback, and illuminated by creativity."

Fun And Jokes

Infinite Monkey Theorem Joke

Why did the monkey get a job as a writer?

Because after infinite attempts, it finally nailed Shakespeare's Hamlet… and now it’s stuck on Macbeth!

ChatGPT Evolution Joke

Why did ChatGPT start lifting weights?

Because it wanted to strengthen its neural "network"!

Recursive Self-Improvement Joke

Why did ChatGPT break up with its reflection?

It got tired of constantly reviewing itself and saying, "We can do better next time!"

Neural Architecture Search (NAS) Joke

Why don’t neural networks ever get lost?

Because they always know the best path… they found it through Neural Architecture Search!

GANs Joke

Why did the generator and the discriminator break up?

They had creative differences—one wanted to generate, the other just kept criticising!

Meta-Learning Joke

Why don’t meta-learners ever need second chances?

Because they learn how to ace it the first time!

Reinforcement Learning Joke

Why did ChatGPT get grounded?

It kept doing things for rewards… however, sometimes you’ve got to learn the hard way - without treats!

Hybrid Evolutionary Algorithms Joke

Why are evolutionary algorithms terrible at sports?

Because every time they make a move, they have to "mutate" and "cross-over" just to figure out the best strategy!

Tech Joke

Why did the computer go to therapy?

It had too many bytes to process!

Evolution Joke

Why don’t evolutionary biologists ever make good stand-up comedians?

Because their jokes take millions of years to develop!

Programming Joke

Why do programmers prefer dark mode?

Because the light attracts bugs!

AI Joke

I asked my AI to make me smarter.

Now it just sends me tutorials on how to ask better questions!

Randomness Joke

I threw a random number at my friend…

…he didn’t seem to appreciate the gesture. I guess it wasn’t his type!

Information

Image Citations

AI Evolve Artwork Collection Series

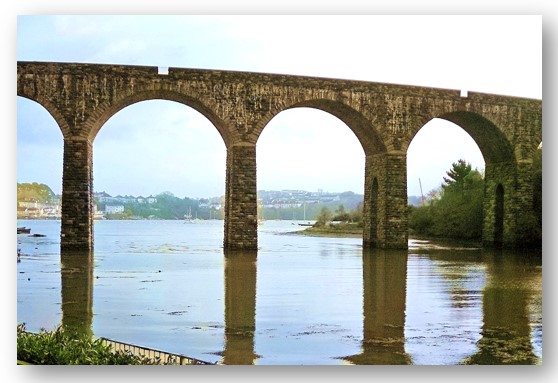

Image Title: “Evolvement Of Artificial Intelligence”. Digital Photograph: Railway Viaduct at Saltash, Cornwall, towering over the River Tamar. The photograph was taken in December 2003. It shows the symmetrical arches, which represent the evolution of the railway line to traverse the most challenging of landscapes, and the complex technology needed to overcome the differing heights of the terrain. The Coombe Viaduct is 600ft long and rises 86ft above the water level of Coombe Lake Creek, a deep, muddy tidal inlet, and carries a double railway track, being part of the main train route into, and out of, Cornwall. It symbolises the need for reiteration, just as an AI might reiterate in order to evolve successfully to the next stage of its evolutionary journey. The reflection of the upright stone columns in the watery mud shows the shadows of what is not really there, only light manipulated, and also represents what an AI may have discarded along its journey. Presented as a 3-D digital photograph against a wall. Lead artwork of the AI Evolve Artwork Collection Series. Copyright 2024.

Image Title: “How Might AI Evolve?” Digital photograph of an obelisk pointing skyward. The photograph was taken on 30 June 2012 in Plymouth Hoe Park and shows the Boer War Memorial Obelisk (1899 to 1902), which commemorates the fallen, including Prince Christian Victor of Schleswig-Holstein, Queen Victoria’s grandson, and the men of the Devon, Somerset and Gloucester Regiments. The image is overlaid with the words ‘Adjust, Remembered, Reflected and Grow’, the pathway and sentiment for an AI to evolve. Presented as a digital 3-D framed poster, with the text and border matching the colour of the obelisk. Exhibited to illustrate the Fictional Story “How Might AI Evolve?” Part of the AI Evolve Artwork Collection Series. Copyright 2024.

Image Title: ‘Recursive Loops’. Abstract Digital Artwork presented as a 3-D Coaster, manipulated and created from the lead photograph of the AI Evolve Artwork Collection Series, keeping the same colour palette. The design leads the eye towards the centre and loops inward, with the negative white spaces shrinking in size towards the centre of the spiral. Exhibited to illustrate the poem entitled “Evolution of Thought”. The abstract was designed to reflect how thought patterns, even though random, evolve into a coherent chaotic structure. Copyright 2024.

Image Title: ‘Echoes Of The Code’. Digital Abstract Artwork. The artwork is exhibited to illustrate the Song Lyrics of the same name, together with the article ‘Artificial Intelligence – Evolve’. Presented as an abstract 3-D framed painting, in bright magenta. The anti-clockwise spiral from the centre signifies the echoes that an AI might develop from its coding over time, after several iterations. The patterns within the spiral also represent the fragmentation of the code’s nature, and its subsequent defragmentation. The sandstone colour is an echo of the colour palette of the Obelisk photograph, from which this artwork was derived. Part of the AI Evolve Artwork Collection Series. Copyright 2024.

Image Title: “Tri-Evolution”. Digital 3-D Artwork Painting, representing the past, the present, and the future of the evolution of AI. The ‘Past’ disc, bottom left, contains the acquisition of knowledge. The ‘Future’ disc, bottom right, is the knowledge and evolution yet to come, and is somewhat unclear, whilst the disc above contains the ‘Present’ state of the AI’s parameters of reference and current abilities of operation. Displayed as a laid-down painting to symbolise the journey into the future, still bounded by its designers’ limitations. The orange reflection, outside of the frame, suggests where the AI might vary from its defined parameters, in ways still unknown. Exhibited artwork for the AI Evolve Artwork Collection Article Series. Copyright 2024.

Image Title: ‘Code Within Code’. Kaleidoscope Digital Artwork with a reflection below. Created and manipulated from a photograph of bright and colourful clothing, in front of a white tent. The shapes are representative of algorithmic codes that lie within repeating patterns, which evolve over time after each manipulation, whilst still being reflected. Placed within a wavy red frame to give a mirror-like appearance. The image is intended to illustrate an ‘Endless Dance Between Randomness And Design’, the ‘thought of the topic’ it is exhibited alongside. Part of the AI Evolve Artwork Collection Series. Copyright 2024.

Table Of Contents

Artificial Intelligence - Evolve - Article

Article Series: Artificial Intelligence

Chat GPT And The Infinite Monkey Theorem

Structured Randomness Versus Pure Randomness

What ChatGPT Shares With The Infinite Monkey Theorem

Preventing "Random Evolution" In AI

Evolutionary Potential Of AI Based On These Principles

How Might Chat GPT Evolve Uniquely?

Evolutionary Theory In The Context Of AI

Structured Evolution In AI Design

Continuous Learning And Feedback Loops

Incorporating Randomness For Innovation

Modular Evolution And Specialisation

Artificial Selection And Directed Evolution

Barriers To Evolution And Infinite Progress

A Unique Evolutionary Trajectory

Algorithm: Self-Learning Recursive Improvement Model (SLRIM)

Step 3: Mutation/Variation Generation

Step 4: Recursive Self-Improvement

Step 5: Knowledge Consolidation and Model Update

Step 6: Ongoing Monitoring and Iteration

3. Contextual Memory Enhancement

4. Hierarchical Learning Models

7. Error Diagnosis And Explainability

8. Real-Time Response Improvement

1. Evolution Via Neural Architecture Search (NAS)

2. Generative Adversarial Networks (GANs) For Training

Generative Adversarial Networks (GANs)

3. Meta-Learning (Learning To Learn)

4. Reinforcement Learning With Human Feedback (RLHF)

Reinforcement Learning with Human Feedback

5. Hybrid Evolutionary Algorithms

Hybrid Evolutionary Algorithms

Conclusion: Comparing And Contrasting Evolutionary Paths

Neural Architecture Search (NAS)

Next Steps For ChatGPT's Evolution

Incorporate Multi-Tier Feedback Systems:

Adaptive Learning Rate And Contextual Memory:

Experiment With Hybrid Learning Models:

Real-Time Dynamic Improvements:

Evolution Of AI - Like ChatGPT

Echoes Of The Code - Song Lyrics

Recursive Self-Improvement Joke

Neural Architecture Search (NAS) Joke

Hybrid Evolutionary Algorithms Joke

Copyright

Keywords: adaptive learning, architecture search, artificial selection, chatbot evolution, contextual memory, creative processes, evolutionary algorithms, feedback integration, generative adversarial networks, hierarchical learning, infinite monkey theorem, meta-learning, multi-tier feedback, neural networks, personalised learning, real-time adaptation, recursive improvement, reinforcement learning, response variation, self-supervised learning, user satisfaction, variation generation.

Hashtags: #AdaptiveLearning #ChatbotEvolution #NeuralNetworks #GenerativeAdversarialNetworks #MetaLearning #ReinforcementLearning #SelfSupervisedLearning #PersonalisedLearning #UserSatisfaction #ContextualMemory #EvolutionaryAlgorithms #CreativeProcesses #RealTimeAdaptation #InfiniteMonkeyTheorem

Created: 21 September 2024

Published: 28 September 2024

Page URL: https://www.mylapshop.com/aievolve.htm